7 crucial steps you need to take to deliver the fastest possible experience to your visitors.

Apart from the quality, connectedness, and relevance of content, speed is one of the most crucial factors in Google’s search ranking.

I just refurbished the site of a friend who happens to be, as far as I am concerned, the best practising internist in his country. The previous site, which I also designed back in 2016, had become aesthetically outdated and was being overwhelmed with visitors. Five years ago he just wanted a decent site to promote his clinic inside a major hospital. He told me at the time that he planned to start publishing a few articles to help people look after their health. Well, he overdid it! Over the years he has published more than 4,000 articles, and his site now receives about 1,000,000 unique users per year!

As I was working on the site’s statistics, I noticed that while he was topping the charts for some rather obscure keywords, thanks to his unique content, the site was tanking in the most popular searches despite the wealth of content.

The first step in the optimization was simply to move the site to a faster server and update the (same) theme to its latest version, as it had not been updated since 2016. This did improve the site’s performance (in terms of popularity) by about 10–15%.

We then decided to go for something more drastic: with so many thousands of articles, the previous theme (designed to promote a doctor’s clinic) was doing the content an injustice. So we decided to go for a “News” theme to better showcase our content to readers.

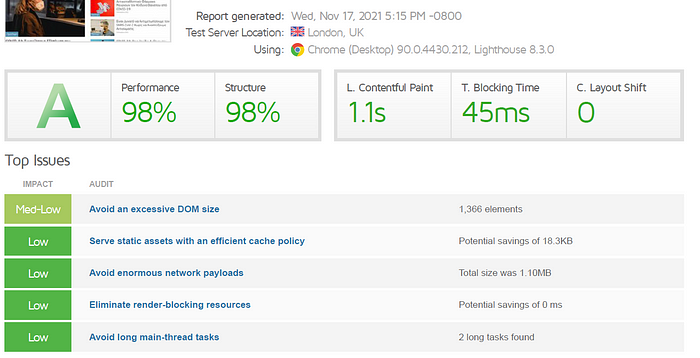

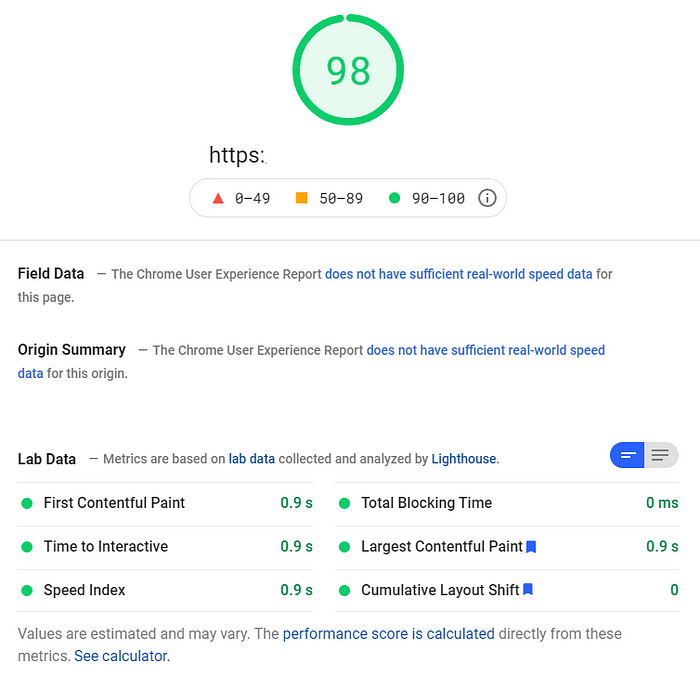

After optimising the new site, its GTmetrix score is now a mighty 98%!

Why am I writing this?

Many friends have asked me how this rating was achieved and what they can do to make their sites faster.

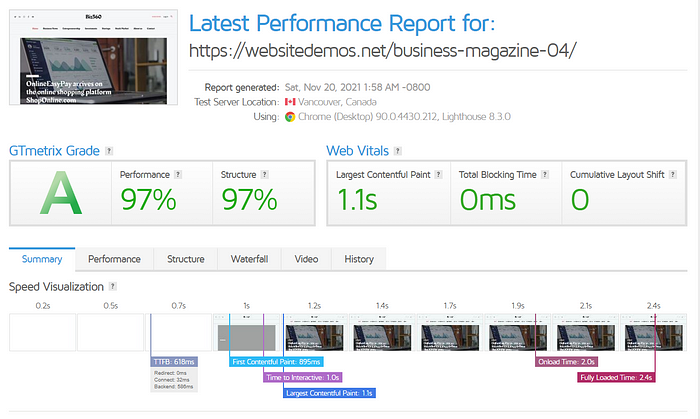

One friend was so impressed that he asked me to see what I could do for his site. I spent a few hours creating a replica of his site on my hosting, doing a little bit of “my magic”, and comparing the performance of the two.

Here you can see the performance comparison, for an identical theme and content.

So we improved rendering by about 3.2 seconds on a page that was taking 4.1 seconds to start displaying content to the end user, bringing it down to roughly 0.9 seconds.

As I cannot keep up with the demand, here is the nitty-gritty of how to achieve this on your site.

Without further ado, let’s get to the steps you need to take to achieve similar results with very little investment.

1. Choose your theme extremely carefully

Before you buy a theme, check the performance of the vendor’s demo.

Go to GTmetrix and see how it ranks. You are unlikely to beat the manufacturer’s own optimised demo, so if the demo ranks poorly, chances are that your own site will rank poorly as well!

WPBeginner has compiled a list of “the best newspaper themes”.

Let’s do some random testing.

Their #1 pick scores:

While the last (by order of appearance) on their list scores:

In my case I opted for TagDiv’s Newspaper theme, which, in the template I based my implementation on, offered this performance:

I chose TagDiv because I have been using it for the past three years (a few years ago I even helped them add custom template functionality for cloning templates). They are lovely guys who love what they do and offer great support, and the performance of their theme is just indicative of my overall experience.

(Yes, I bettered their own demo figure! Keep reading.)

2. Remove junk

It is unbelievable how many template-based sites are overloaded with useless stuff, only because the developer was too lazy to strip out functionality that the template vendor offers but that is useless for the project at hand.

One of my favourite pieces of junk to get rid of is the Revolution Slider code (about 0.6 MB per page altogether) on pages that have no slider.

I use Revolution Slider (the best slider on the market) on the “About us” page, which fewer than 1% of visitors see.

But the Revolution Slider code, by default, is included in each and every page!

So I went to line 90 of `wp-content\plugins\revslider\public\revslider-front.class.php` and added an `if` condition like this:

```php
if (is_page(522)) {
    …
}
```

(522 is the ID of the page that has the slider.)

I also wrote to the guys at ThemePunch to complain about their junk! They duly informed me that this is out-of-the-box functionality: you only have to go to Revolution Slider’s global settings, enter the ID(s) of the page(s) where your sliders appear, and you are done. I guess it is one of those features that very few users know about, never mind put into practice.

Another way to remove junk is to add instructions to your functions.php that remove unwanted functionality. For example, removing emojis:

```php
// Removing Emojis
add_action( 'init', 'infophilic_disable_wp_emojicons' );

function infophilic_disable_wp_emojicons()
{
    // all actions related to emojis
    remove_action( 'admin_print_styles', 'print_emoji_styles' );
    remove_action( 'wp_head', 'print_emoji_detection_script', 7 );
    remove_action( 'admin_print_scripts', 'print_emoji_detection_script' );
    remove_action( 'wp_print_styles', 'print_emoji_styles' );
    remove_filter( 'wp_mail', 'wp_staticize_emoji_for_email' );
    remove_filter( 'the_content_feed', 'wp_staticize_emoji' );
    remove_filter( 'comment_text_rss', 'wp_staticize_emoji' );
}
```

Or removing WordPress scripts such as wp-embed, whose functionality is now covered by your theme vendors:

```php
// Remove WP embed script
function infophilic_stop_loading_wp_embed() {
    if (!is_admin()) {
        wp_deregister_script('wp-embed');
    }
}

add_action('init', 'infophilic_stop_loading_wp_embed');
```

And many more.

Rest assured that there are experts for all major themes who can help you weed out unnecessary code.

In TagDiv’s case, please visit this link for the best optimisation advice for the Newspaper theme. Nice work, Amit!

3. Optimise images/fonts/css/javascript

Images:

Using WebP in your pages can save anywhere between 40% and 60% of image traffic.

I highly recommend Bjørn Rosell’s WebP Express, which works like a charm.

If your server runs nginx, you may have to do some manual configuration to make it work (nginx ignores the .htaccess rules the plugin relies on), but it is well worth the effort. Bjørn provides all the necessary instructions!
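Under the hood, serving WebP only to browsers that can display it is HTTP content negotiation on the `Accept` request header. The Python sketch below illustrates the idea; it is not WebP Express’s code, and the function names and the “photo.jpg → photo.jpg.webp” file layout are assumptions made for illustration.

```python
import os


def accepts_webp(accept_header: str) -> bool:
    """True if the Accept header explicitly lists image/webp with a non-zero q-value."""
    for part in accept_header.split(","):
        fields = [field.strip() for field in part.split(";")]
        if fields[0].lower() != "image/webp":
            continue
        q = 1.0
        for param in fields[1:]:
            name, _, value = param.partition("=")
            if name.strip().lower() == "q":
                try:
                    q = float(value)
                except ValueError:
                    q = 0.0
        return q > 0
    return False


def pick_image_variant(path: str, accept_header: str) -> str:
    """Return the WebP sibling of `path` if the browser accepts WebP and the file exists."""
    webp_path = path + ".webp"  # hypothetical layout: photo.jpg -> photo.jpg.webp
    if accepts_webp(accept_header) and os.path.exists(webp_path):
        return webp_path
    return path
```

The sketch deliberately does not treat `*/*` as WebP support, since a wildcard says nothing about what the browser can actually decode. A server that negotiates this way should also send `Vary: Accept`, so that caches (including a CDN) do not hand a WebP file to a browser that never asked for one.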

Fonts:

Google Fonts are fine, but they are better served from your own server/CDN than requested from Google each and every time. Not only do you spare yourself the extra DNS lookups and external requests, but you can also preload the fonts so that they are in the user’s browser before the page renders. This approach minimises layout shifts (the movement of the page as it initially renders with the default fonts, before they are replaced by the specified fonts), which are a key metric (Cumulative Layout Shift) in site performance benchmarking.

You can do this as follows. First, write your own stylesheet in the form:

```css
@font-face {
  font-family: "PFDinDisplay-Light";
  src: url("fonts/PFDinDisplay/PFDinDisplayLight.woff2") format("woff2");
  font-style: normal;
  font-weight: 200;
  font-display: swap;
}
```

and place it in your child theme.

Then add a function like this to your functions.php:

```php
// Despite its name, this prints a font *preload* hint into <head>;
// priority 0 runs it before callbacks hooked at the default priority (10).
function dns_prefetch_responsive() {
    echo "<link href='https://yourdomain.com/wp-content/themes/pathology/assets/fonts/PFDinDisplay/PFDinDisplayLight.woff2' rel='preload' as='font' type='font/woff2' crossorigin>";
}

add_action( 'wp_head', 'dns_prefetch_responsive', 0 );
```

And you are all set!
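If you self-host several fonts, you can generate the preload tags instead of writing them by hand. A minimal Python sketch; the function name and the extension-to-MIME map are illustrative assumptions:

```python
import html
import posixpath
from urllib.parse import urlparse

# Font file extension -> MIME type for the preload "type" attribute.
FONT_TYPES = {
    ".woff2": "font/woff2",
    ".woff": "font/woff",
    ".ttf": "font/ttf",
    ".otf": "font/otf",
}


def font_preload_tag(url: str) -> str:
    """Build a <link rel="preload"> tag for one font URL.

    Fonts are always fetched in CORS mode, so crossorigin is required even for
    same-origin fonts; without it the preloaded file is not reused and the
    font is downloaded twice.
    """
    ext = posixpath.splitext(urlparse(url).path)[1].lower()
    if ext not in FONT_TYPES:
        raise ValueError(f"unsupported font extension: {ext!r}")
    return (
        f'<link rel="preload" href="{html.escape(url, quote=True)}" '
        f'as="font" type="{FONT_TYPES[ext]}" crossorigin>'
    )
```

The output can be pasted into the `echo` of the PHP function; the query string (e.g. a `?v=` cache-buster) is ignored when detecting the extension.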

Just make sure you are using the latest and most efficient format, WOFF2. If not, you will be penalized!
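A file can carry a `.woff2` extension without actually being WOFF2 (for instance after a careless rename). Every font container starts with a four-byte signature, so a quick Python check can confirm what you are really serving. The function names are hypothetical:

```python
from pathlib import Path
from typing import List

# The first four bytes of each font container format.
SIGNATURES = {
    b"wOF2": "woff2",
    b"wOFF": "woff",
    b"\x00\x01\x00\x00": "ttf",
    b"OTTO": "otf",
}


def font_format(header: bytes) -> str:
    """Identify a font's real container format from its first four bytes."""
    return SIGNATURES.get(header[:4], "unknown")


def find_fake_woff2(font_dir: str) -> List[str]:
    """List *.woff2 files under font_dir whose contents are not actually WOFF2."""
    fakes = []
    for path in sorted(Path(font_dir).rglob("*.woff2")):
        with path.open("rb") as f:
            if font_format(f.read(4)) != "woff2":
                fakes.append(str(path))
    return fakes
```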

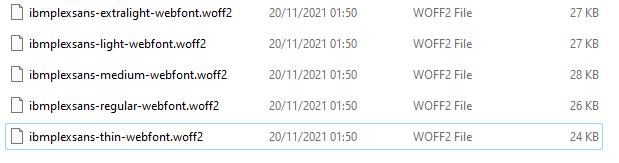

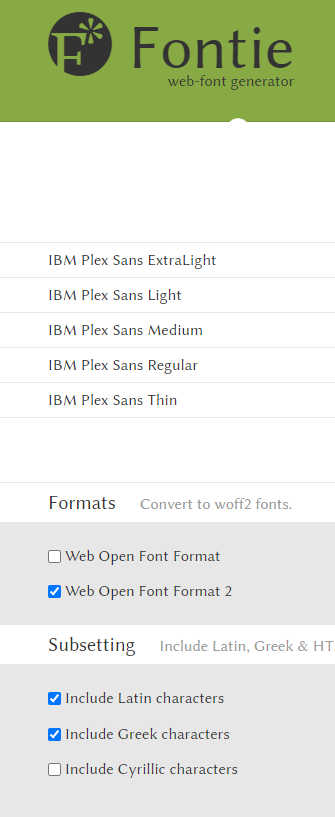

For example, download your IBM Plex Sans Google Fonts (as TTF), selecting only the weights your site actually uses:

Please note that different WOFF2 converters achieve different compression. Converting the fonts to WOFF2 via Convertio gives you these sizes:

Whereas converting them via Fontie will give you these:

A saving of nearly 50%: on my site this amounts to about 300 KB overall!

PS: If you use international character sets, make sure you select the appropriate languages from the options, as you don’t want to include Arabic, Cyrillic, etc. glyphs in the fonts for your Greek content.
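To decide which languages to select, it can help to check which scripts your content actually uses. A rough Python heuristic (an illustration, not a feature of any converter) groups letters by the first word of their Unicode character name:

```python
import unicodedata
from collections import Counter


def scripts_used(text: str) -> Counter:
    """Count letters per script, keyed by the first word of each character's Unicode name.

    e.g. 'GREEK SMALL LETTER ALPHA' -> 'GREEK', 'LATIN SMALL LETTER A' -> 'LATIN'.
    """
    counts: Counter = Counter()
    for ch in text:
        if ch.isalpha():
            name = unicodedata.name(ch, "")
            if name:
                counts[name.split()[0]] += 1
    return counts


print(scripts_used("Καλημέρα, hello"))  # Counter({'GREEK': 8, 'LATIN': 5})
```

If the result over your articles only lists `GREEK` and `LATIN`, the Arabic and Cyrillic subsets can be left out. Some letters have unusual names (e.g. `ª` is “FEMININE ORDINAL INDICATOR”) and show up under odd keys, which is why this is only a heuristic.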

PPS: Talking about preloading and prefetching:

It is also good practice to add preconnect and dns-prefetch hints like these to your header for calls to “external” services.

The absence of these directives, especially dns-prefetch, is also heavily penalised (and rightly so)!

```html
<link rel="preconnect" href="https://static.cloudflareinsights.com/">
<link rel="dns-prefetch" href="https://static.cloudflareinsights.com/">
```
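If your pages call several external services, the hints can be derived from the list of URLs. A minimal Python sketch (the function name and example URLs are assumptions) that emits one preconnect/dns-prefetch pair per distinct third-party origin and skips your own origin:

```python
from typing import List
from urllib.parse import urlsplit


def resource_hints(urls: List[str], own_origin: str) -> List[str]:
    """Emit a preconnect + dns-prefetch pair for each distinct third-party origin.

    dns-prefetch acts as a fallback for browsers that do not support preconnect.
    """
    own = urlsplit(own_origin)
    origins: List[str] = []
    for url in urls:
        parts = urlsplit(url)
        if (parts.scheme, parts.netloc) == (own.scheme, own.netloc):
            continue  # our own origin needs no hint
        origin = f"{parts.scheme}://{parts.netloc}"
        if origin not in origins:
            origins.append(origin)
    tags: List[str] = []
    for origin in origins:
        tags.append(f'<link rel="preconnect" href="{origin}">')
        tags.append(f'<link rel="dns-prefetch" href="{origin}">')
    return tags
```

For origins that serve fonts, the preconnect hint also needs the `crossorigin` attribute, because font requests use CORS; the sketch leaves that out for simplicity.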

CSS/JavaScript

It is good to minify your CSS and JavaScript and combine each into a single file. Creating these CSS and JavaScript bundles is elementary in Bootstrap-based development. Obviously, you can use gulp (the same tool you would use for any Bootstrap-based theme such as Metronic) if you do not plan to change your theme any time soon. But as themes get updated every so often, it is better to use either plugins like Autoptimize or the facilities offered by CDN providers; I will talk more about this later.

The Newspaper theme even has its own CSS optimizer (in beta)! It strips their huge CSS (which covers all possible theme functionality) of every directive that is irrelevant to your implementation. It has a few glitches, but it works like a charm if you are not doing anything extreme.
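Whichever tool does the job, combining and minifying comes down to concatenating files in order and stripping what the browser does not need. A deliberately naive Python sketch for CSS only (function names are hypothetical; JavaScript is left out because naive JavaScript minification breaks code far too easily):

```python
import re
from pathlib import Path
from typing import List

COMMENT = re.compile(r"/\*.*?\*/", re.S)
SPACE = re.compile(r"\s+")
AROUND_PUNCT = re.compile(r"\s*([{};,])\s*")


def minify_css(css: str) -> str:
    """Naive minifier: drop comments, collapse whitespace, trim around { } ; ,

    Not safe for CSS whose strings contain '/*' or significant runs of spaces;
    use a real tool (or your plugin/CDN) in production.
    """
    css = COMMENT.sub("", css)
    css = SPACE.sub(" ", css)
    css = AROUND_PUNCT.sub(r"\1", css)
    return css.replace(";}", "}").strip()


def bundle_css(paths: List[str]) -> str:
    """Concatenate stylesheets in the given order (order matters for the cascade), then minify."""
    return minify_css("\n".join(Path(p).read_text(encoding="utf-8") for p in paths))
```

Spaces around `:` are left alone on purpose: in a selector such as `div :first-child` that space changes the meaning.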

4. Disconnect the dynamic page delivery from the static content delivery

Obviously, caching mechanisms do help with performance. There are great plugins such as WP Super Cache by Automattic, the company founded by WordPress co-founder Matt Mullenweg.

But here I am talking about something completely different: creating your own CDN inside your WordPress installation.

Why are we doing this? Because each file served can come with extra metadata that is best avoided.

For example, even requests for images carry the site’s cookies. So it is good to strip this additional information. How do we do this?

Let’s say that our site is hosted at domain.com.

a. On the same server, we create a new website, say static.domain2.com.

This new site’s document root points to the wp-content directory of domain.com.

Our domain.com will only serve the dynamic pages.

All the other content will be served through static.domain2.com.

b. We go to the wp-config.php of domain.com and add these two lines:

```php
define("WP_CONTENT_URL", "https://static.domain2.com");
define("COOKIE_DOMAIN", "domain.com");
```

c. Then we go to the database of domain.com and run the following query:

```sql
UPDATE wp_posts SET post_content = REPLACE(post_content, 'domain.com/wp-content/', 'static.domain2.com/');
```

That’s all!

The WordPress engine runs on domain.com, but all the static files are sent out by a plain vanilla Apache/nginx server at static.domain2.com.
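A caveat on the REPLACE query in step c: it matches `domain.com/wp-content/` anywhere in a string, so if your posts store URLs as `https://www.domain.com/wp-content/...`, the result becomes `https://www.static.domain2.com/...`, a host you never set up. A Python sketch of a stricter rewrite (the regex and function name are illustrative assumptions), useful for dry-running the change on exported content before touching the database:

```python
import re

# Match the whole origin (scheme optional, "www." optional) so that
# "https://www.domain.com/wp-content/" is not turned into "https://www.static.domain2.com/".
CONTENT_URL = re.compile(r"(https?:)?//(?:www\.)?domain\.com/wp-content/")


def rewrite_content_urls(text: str, static_host: str = "static.domain2.com") -> str:
    """Point domain.com wp-content URLs at the static host, keeping the original scheme."""
    return CONTENT_URL.sub(lambda m: f"{m.group(1) or ''}//{static_host}/", text)
```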

Why don’t we use a subdomain of our own domain? Because cookies set on the parent domain propagate to its subdomains as well, so a subdomain of a domain that already sets cookies is not cookie-free. You need a clean, cookie-free domain for that.
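The rule behind this is the cookie domain-matching check of RFC 6265 (section 5.1.3). A simplified Python sketch of it; the function name is hypothetical, and IP-address hosts and public-suffix checks are ignored:

```python
def cookie_sent_to(request_host: str, cookie_domain: str, host_only: bool = False) -> bool:
    """Would a browser attach a cookie to requests for request_host?

    cookie_domain is the cookie's Domain attribute (a leading dot is ignored),
    or the exact host that set it when host_only is True (no Domain attribute).
    """
    host = request_host.lower()
    domain = cookie_domain.lower().lstrip(".")
    if host_only:
        return host == domain
    return host == domain or host.endswith("." + domain)


# Cookies for domain.com reach every subdomain, including static.domain.com ...
print(cookie_sent_to("static.domain.com", "domain.com"))       # True
# ... but never a separate domain such as domain2.com.
print(cookie_sent_to("static.domain2.com", "domain.com"))      # False
# Setting cookies on www.domain.com keeps static.domain.com clean.
print(cookie_sent_to("static.domain.com", "www.domain.com"))   # False
```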

But did we do all this just to avoid a little tracking/security mechanism implemented on the web, just because a few years ago some people were using image files to covertly send private messages undetected by the security services?

No, we are doing it to achieve something far greater: we can now tune our two web servers (which look at the same content) so that each does what it does best.

For example, you can configure the server for your static content to run Apache, whereas the web server for your dynamic (PHP) content can run nginx with FastCGI; we are not going to argue about it, just take your pick. Let’s just agree that a web server optimised to serve PHP faster will do better without also having to serve images, video, etc. So you get the best of both worlds!

The last part of this is creating a CORS policy, so that items such as fonts served from the static domain can be used by pages on our domain.

To do this, you just add the following to the vhost file of the “static domain” (other MIME types and headers stripped out for brevity):

```nginx
location ~ \.(woff|woff2|svg|ttf|eot|otf)$ {
    add_header "Access-Control-Allow-Origin" "*";
}
```

On domain.com, however, you do something like this:

```nginx
location ~* \.(other|mime|types|here)$ {
    …
    if ($request_uri ~ ^[^?]*\.(ttf|ttc|otf|eot|woff|woff2|font.css)(\?|$)) {
        …
        add_header Access-Control-Allow-Origin "*";
        …
    }
}
```
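Because the `if` test looks at `$request_uri`, which includes the query string, the `^[^?]*` prefix stops a query string from faking a font extension. A quick Python check of the same pattern (the helper name is hypothetical; this particular pattern behaves the same under Python’s `re` as under nginx’s PCRE):

```python
import re

# Same pattern as the nginx `if ($request_uri ~ ...)` test above.
FONT_URI = re.compile(r"^[^?]*\.(ttf|ttc|otf|eot|woff|woff2|font.css)(\?|$)")


def needs_cors_header(request_uri: str) -> bool:
    """True if the nginx rule above would add Access-Control-Allow-Origin for this URI."""
    return FONT_URI.search(request_uri) is not None


print(needs_cors_header("/fonts/a.woff2?ver=5.8"))   # True
print(needs_cors_header("/index.php?file=a.woff2"))  # False
```

nginx’s `~` operator is case-sensitive, as is the sketch, so `/fonts/A.WOFF2` would not get the header; `~*` would make the match case-insensitive.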

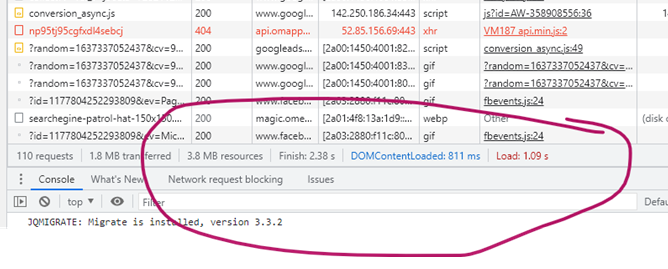

This also ties in with the story about cookies propagating to subdomains. If you set the Access-Control-Allow-Origin header on both the domain and its subdomain, the headers are bound to conflict and you will get a lovely message like this:

There is a trick here, though: if you can afford to set your cookies on a subdomain such as www.domain.com, you can create another subdomain (e.g. static.domain.com) to host all of your static files, and requests for them will no longer carry any cookies.

So the final version of your wp-config.php file ought to look like this:

```php
define("WP_CONTENT_URL", "https://static.domain.com");
define("COOKIE_DOMAIN", "www.domain.com");
```

5. Employ a CDN

In our case we started with the free version of Cloudflare, which also takes care of DNS, so our name servers are now protected from DDoS attacks.

Then we decided to upgrade to Pro ($20 per month regardless of usage) for additional services that further optimise the experience, from serving lossless WebP files to minifying and combining CSS and JavaScript.

Subsequently we opted for the Argo service ($5 for the first 5 GB and $0.10/GB after that), which offers additional speed benefits.

Please visit here for their catalogue.

Obviously, you can choose any CDN you like, but Cloudflare is one of the most affordable. Many argue that Akamai offers the best service (in fact, I happened to be Akamai’s first paying customer in my native Greece back in 2001), but rest assured that our setup beats the hell out of most Akamai clients that have not done the previous optimisations.

A footnote about cookies: yes, Cloudflare (like any CDN) does add its own cookies to the objects it serves; it is a security imperative. But that does not mean it won’t perform better if the content we hand it carries no cookies of our own to propagate! After all, your competitors may be using Cloudflare too, so this gives you a relative performance edge.

6. Tune your origin server to meet the new requirements

Once the CDN is set up, you may find that a few things need to change.

For example, you may notice that when Cloudflare’s monster kit starts crawling your site for content after a cache purge, your server cannot handle the requests. You may then decide to switch PHP from FastCGI to FPM (or even dedicated FPM) for added peace of mind.

Tuning is an art and a craft, but you may want to play with these settings.
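With PHP-FPM, one key setting for absorbing a burst of requests after a purge is `pm.max_children`, the maximum number of PHP worker processes. A common rule of thumb is to divide the RAM left for PHP by the average worker’s memory footprint. A Python sketch of that arithmetic (the function name is hypothetical, and the numbers below are placeholders, not measurements from our server):

```python
def fpm_max_children(total_ram_mb: int, reserved_mb: int, avg_worker_mb: int) -> int:
    """Rule-of-thumb pm.max_children: RAM left for PHP divided by the average worker size.

    reserved_mb covers everything else on the box (OS, web server, database, caches).
    """
    if avg_worker_mb <= 0:
        raise ValueError("avg_worker_mb must be positive")
    available_mb = total_ram_mb - reserved_mb
    return max(1, available_mb // avg_worker_mb)


print(fpm_max_children(4096, 1024, 64))  # 48
```

Measure the average worker size on your own server rather than trusting placeholder figures; setting the limit too high trades rejected requests for swapping.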

Also do not forget to add expiration headers to your .htaccess file and to enable compression. For example, in my case I added these entries:

```apache
<IfModule mod_expires.c>
ExpiresActive On
ExpiresDefault "access plus 5 seconds"
ExpiresByType image/x-icon "access plus 1 year"
ExpiresByType image/jpeg "access plus 1 year"
ExpiresByType image/png "access plus 1 year"
ExpiresByType image/gif "access plus 1 year"
ExpiresByType image/webp "access plus 1 year"
ExpiresByType application/x-shockwave-flash "access plus 1 year"
ExpiresByType text/css "access plus 1 year"
ExpiresByType text/javascript "access plus 1 year"
ExpiresByType application/javascript "access plus 1 year"
ExpiresByType application/x-javascript "access plus 1 year"
ExpiresByType font/truetype "access plus 1 year"
ExpiresByType font/opentype "access plus 1 year"
ExpiresByType application/x-font-woff "access plus 1 year"
ExpiresByType application/font-woff2 "access plus 1 year"
ExpiresByType application/x-font-woff2 "access plus 1 year"
# font/woff and font/woff2 are the registered MIME types for WOFF fonts
ExpiresByType font/woff "access plus 1 year"
ExpiresByType font/woff2 "access plus 1 year"
ExpiresByType image/svg+xml "access plus 1 year"
ExpiresByType application/vnd.ms-fontobject "access plus 1 year"
ExpiresByType text/html "access plus 1 year"
ExpiresByType application/xhtml+xml "access plus 1 year"
</IfModule>
# END Expire headers

<IfModule mod_deflate.c>
# woff/woff2 are already compressed internally, so deflating them gains little
<FilesMatch "\.(js|css|woff|woff2|html|php)$">
SetOutputFilter DEFLATE
</FilesMatch>
</IfModule>
```
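On the wire, each of these rules produces two response headers: `Expires` (an absolute date) and `Cache-Control: max-age` (a lifetime in seconds). A Python sketch of the same policy for a few types; the dictionary mirrors only a subset of the rules above, the function name is hypothetical, and a year is taken as 365 days:

```python
from email.utils import formatdate
from typing import Dict

YEAR = 365 * 24 * 60 * 60  # one year of 365 days = 31,536,000 seconds
DEFAULT_SECONDS = 5  # ExpiresDefault "access plus 5 seconds"

# A subset of the ExpiresByType rules above: MIME type -> lifetime in seconds.
EXPIRES_BY_TYPE = {
    "image/jpeg": YEAR,
    "image/webp": YEAR,
    "text/css": YEAR,
    "application/javascript": YEAR,
    "font/woff2": YEAR,
}


def caching_headers(mime_type: str, now: float) -> Dict[str, str]:
    """Headers an "access plus N" rule yields for a response served at `now` (Unix time)."""
    seconds = EXPIRES_BY_TYPE.get(mime_type, DEFAULT_SECONDS)
    return {
        "Cache-Control": f"max-age={seconds}",
        "Expires": formatdate(now + seconds, usegmt=True),
    }


print(caching_headers("image/webp", 0))
# {'Cache-Control': 'max-age=31536000', 'Expires': 'Fri, 01 Jan 1971 00:00:00 GMT'}
```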

Talking about compression: in SolarWinds’ Pingdom you will erroneously get a message advising you to “Compress components with gzip”. This is a bug, as the tool expects gzip specifically.

Cloudflare uses a better compression algorithm (Brotli), which goes undetected by SolarWinds.
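Which compression a client receives is negotiated through its `Accept-Encoding` request header: a browser that advertises `br` can be served Brotli, while a client that only advertises gzip gets gzip. A simplified Python sketch of that choice; the function name and the server’s preference order are assumptions, and this is not Cloudflare’s actual logic:

```python
from typing import Dict, Sequence


def choose_encoding(accept_encoding: str, available: Sequence[str] = ("br", "gzip")) -> str:
    """Return the first encoding in `available` that the client accepts with q > 0.

    Simplifications: the server's preference order wins over the client's q-values,
    and the "*" wildcard is ignored. Falls back to "identity" (no compression).
    """
    accepted: Dict[str, float] = {}
    for part in accept_encoding.split(","):
        token, *params = [p.strip() for p in part.split(";")]
        if not token:
            continue
        q = 1.0
        for param in params:
            name, _, value = param.partition("=")
            if name.strip().lower() == "q":
                try:
                    q = float(value)
                except ValueError:
                    q = 0.0
        accepted[token.lower()] = q
    for encoding in available:
        if accepted.get(encoding, 0.0) > 0:
            return encoding
    return "identity"


print(choose_encoding("gzip, deflate, br"))  # br
print(choose_encoding("gzip, deflate"))      # gzip
```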

You may wonder why we have to do this on our origin server, since our CDN will look after it.

Well, we do this because:

1. it is good practice to act as if there were no CDN;

2. the CDN is just our “preferred end user”, and we have to help it consume our information as best it can;

3. it gives us an advantage over competitors who also use a CDN.

7. Test for performance and fine-tune the site

First of all, use several different tools.

The recommended tools are:

https://gtmetrix.com/ by GTmetrix

https://pagespeed.web.dev/ by Google

https://tools.pingdom.com/ by Solarwinds

https://www.webpagetest.org/ by Catchpoint

Then start investigating the good, the bad and the ugly that each of these tools reports.
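Alongside these tools, it helps to check the raw response headers yourself before and after each change. A small Python sketch using only the standard library; the function name and header list are illustrative, and any URL you pass (e.g. a file on your static domain) is your own:

```python
import urllib.request
from typing import Dict, Optional

# Response headers relevant to the checks discussed in this article.
AUDITED = (
    "Cache-Control",
    "Expires",
    "Content-Encoding",
    "Set-Cookie",
    "Access-Control-Allow-Origin",
    "Vary",
)

# Bypass any proxy configured in the environment, so we see what the server itself sends.
_OPENER = urllib.request.build_opener(urllib.request.ProxyHandler({}))


def audit_headers(url: str, timeout: float = 10.0) -> Dict[str, Optional[str]]:
    """Fetch `url` advertising Brotli/gzip support; return the audited headers (None if absent).

    Only the first Set-Cookie header is reported; the body is never decoded.
    """
    request = urllib.request.Request(url, headers={"Accept-Encoding": "br, gzip"})
    with _OPENER.open(request, timeout=timeout) as response:
        return {name: response.headers.get(name) for name in AUDITED}
```

For a static-domain image, for example, you would hope to see a long `Cache-Control` lifetime and `Set-Cookie` reported as `None`.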

Obviously, Google’s own tool carries a lot more gravitas, and it is one of the strictest tools you can get. SolarWinds also uses the Lighthouse engine that powers Google’s.

This video from Google’s November 2021 Chrome Dev Summit can also help you figure out the current trends.

Conclusion

Most of the information shared here came from the results we got from the various tools. For example, some tools penalize you for short content expiration times in the headers, so you go to your server (or your CDN panel) and set longer ones. We noticed, for instance, that all tools penalize images with a one-month expiration; increasing it to one year removes the warnings from all of them.

A couple of things about how Google treats mobile: Google seems to prefer the AMP engine, and providing a rich, responsive site on mobile is heavily penalized. Somehow, they assume we use our phones not on high-speed Wi-Fi but on GSM/3G networks. As we move to 5G, one expects this (rather unfair) penalty on richness of experience to be reduced.

Edward, a prominent user on tagDiv’s forum, informed us that:

One of the largest Western publications in the field of search marketing, SearchEngineLand, has decided to abandon AMP pages on its website.

In June, Google announced that the use of AMP would cease to be a requirement for including content in the “Top News”. This gave another reason to discuss whether it is worth continuing to support this technology.

Thankfully, most themes nowadays offer the choice of integrating with AMP, using a responsive theme (based on the desktop version), or even creating a mobile theme that takes its content from the desktop version but lets you optimize the code, so that instead of “hiding” the DOM elements of the desktop version, it uses a fully mobile-optimised template.

Users of TagDiv’s Newspaper theme would do well to watch this video.

I have to admit that this is a work in progress for me as well (making our site top the charts in mobile delivery without using the AMP backbone).

Special mention: The Google Ads factor!

If your site serves ads via Google’s own engine, expect to lose about 20 points in the speed score!

If you are using their auto-placement functionality, your structure score will suffer as well.

Even Google’s own site performance tool penalises you for including their code:

For example, we also scored 93–98 in Google’s own PageSpeed Insights:

Yet when we placed Google AdSense code (with auto placement, I admit) to start serving ads, the score dropped to 83.

I will come back to this in another article, as we are still experimenting. Obviously, it makes sense not to use auto placement but to reserve spaces in your pages, so that the rendering of the page is not disrupted when the ads are injected.
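Reserving space matters because of how layout shifts are scored: for each unexpected shift, Chrome multiplies the impact fraction (the share of the viewport covered by the unstable element before and after it moves) by the distance fraction (how far it moved, relative to the viewport’s largest dimension). A simplified Python sketch for a single element, say content pushed down by an injected ad; the names are hypothetical, and it ignores how real CLS unions several elements and groups shifts into session windows:

```python
from typing import NamedTuple


class Rect(NamedTuple):
    x: float
    y: float
    w: float
    h: float


def _clip(r: Rect, vw: float, vh: float) -> Rect:
    """Clip a rectangle to the viewport (0, 0, vw, vh)."""
    x0, y0 = max(r.x, 0), max(r.y, 0)
    x1, y1 = min(r.x + r.w, vw), min(r.y + r.h, vh)
    return Rect(x0, y0, max(x1 - x0, 0), max(y1 - y0, 0))


def _area(r: Rect) -> float:
    return r.w * r.h


def _overlap(a: Rect, b: Rect) -> float:
    w = min(a.x + a.w, b.x + b.w) - max(a.x, b.x)
    h = min(a.y + a.h, b.y + b.h) - max(a.y, b.y)
    return max(w, 0) * max(h, 0)


def layout_shift_score(before: Rect, after: Rect, vw: float, vh: float) -> float:
    """Impact fraction x distance fraction for ONE element that moved."""
    b, a = _clip(before, vw, vh), _clip(after, vw, vh)
    impact_fraction = (_area(b) + _area(a) - _overlap(b, a)) / (vw * vh)
    distance = max(abs(after.x - before.x), abs(after.y - before.y))
    return impact_fraction * (distance / max(vw, vh))


# An element covering the top half of a 100x100 viewport is pushed down by 25px:
print(layout_shift_score(Rect(0, 0, 100, 50), Rect(0, 25, 100, 50), 100, 100))  # 0.1875
```

Here the impact fraction is 0.75 and the distance fraction 0.25. If the ad slot is reserved in advance, the content never moves, the distance fraction is 0, and the shift scores nothing.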

Honestly, the principal value of this article is that it touches on many different aspects of performance which, in large companies, are usually handled by different departments, while some “details”, such as font file sizes, usually go unnoticed. This is their undoing, I have to say, as the sysadmins will fight against the developers’ recommendations and vice versa. So its main purpose is simply to be a manual for orchestrating the parameters you need to take into account when talking to your… favourite developer and/or sysadmin and/or digital designer and/or hosting support technician.